E-Learning Maturity Model

Practice Assessments

Practices

Each process is further broken down within each dimension into practices that are either essential (listed in bold type) or just useful (listed in plain type) in achieving the outcomes of the particular process from the perspective of that dimension. These practices are intended to capture the essence of the process as a series of items that can be assessed easily in a given institutional context. The practices are intended to be sufficiently generic that they can reflect the use of different pedagogies, technologies and organisational cultures. The eMM is aimed at assessing the quality of the processes, not at promoting particular approaches.

Assessments of Capability

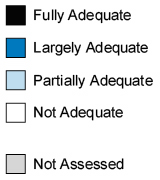

When conducting an assessment, each practice is rated for performance from 'not adequate' to 'fully adequate' (Figure 1). The ratings at each dimension are made on the basis of the evidence collected from the institution and combine three considerations: whether or not the practice is performed, how well it appears to be functioning, and how prevalent it appears to be. The practices have been deliberately designed to minimise variation in determining capability, but this is necessarily an exercise of judgement, and assessors are encouraged to work with an experienced assessor before conducting their own capability assessments. It is also very useful to note what evidence underpins the assessment and to have more than one assessor work independently and then make the final determination jointly.

Figure 1: eMM Capability Assessments (based on Marshall and Mitchell, 2003)
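The record-keeping described above (noting the evidence behind each rating, and having assessors work independently before a joint determination) can be sketched as a small data structure. This is only an illustration, not part of the eMM itself: the two intermediate rating labels, the practice identifiers, the assessor names and the evidence notes are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Rating(IntEnum):
    # 'Not adequate' and 'fully adequate' come from the text; the two
    # intermediate labels are assumed for this sketch.
    NOT_ADEQUATE = 0
    PARTIALLY_ADEQUATE = 1
    LARGELY_ADEQUATE = 2
    FULLY_ADEQUATE = 3


@dataclass(frozen=True)
class PracticeRating:
    practice: str   # hypothetical practice identifier
    assessor: str   # who made this independent rating
    rating: Rating
    evidence: str   # note on what evidence underpins the rating


def needs_joint_review(ratings):
    """Return the practices on which independent assessors disagree."""
    seen_by_practice = {}
    for r in ratings:
        seen_by_practice.setdefault(r.practice, set()).add(r.rating)
    return sorted(p for p, seen in seen_by_practice.items() if len(seen) > 1)


# Hypothetical ratings from two assessors working independently.
ratings = [
    PracticeRating("practice-1", "assessor A", Rating.LARGELY_ADEQUATE, "course outlines"),
    PracticeRating("practice-1", "assessor B", Rating.LARGELY_ADEQUATE, "course outlines"),
    PracticeRating("practice-2", "assessor A", Rating.PARTIALLY_ADEQUATE, "policy document"),
    PracticeRating("practice-2", "assessor B", Rating.NOT_ADEQUATE, "course websites"),
]

print(needs_joint_review(ratings))  # ['practice-2']
```

The function only flags where the assessors differ; resolving the difference remains a matter of discussion and judgement between them.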

Evidence should be collected primarily from examples of courses actually being delivered by the institution. This ensures that the assessment is being made on the basis of actual performance, not intended or idealised performance. The courses used should be representative rather than exceptional (either good or bad). This does not mean randomly selected, as it is generally unhelpful to assess courses that are not representative of the institution (see below for further discussion about the selection of courses).

Naturally, this evidence needs to be supplemented by materials from enrolment packs, websites and formal documents, including policies, procedures and strategies. If an external assessment is being undertaken (as is strongly recommended), then the initial assessment evidence should be verified with knowledgeable insiders, although they should not influence the capability determination itself.

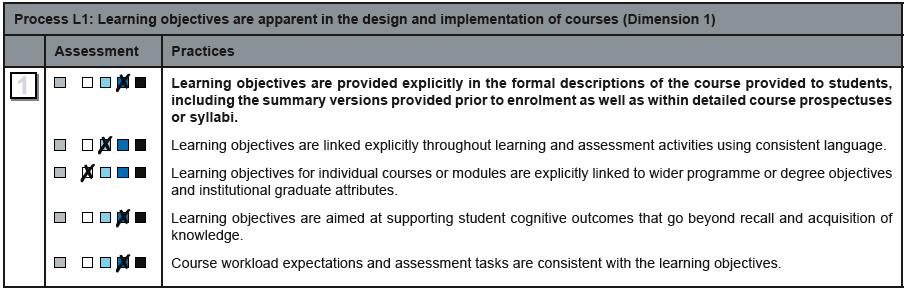

Figure 2: Example eMM Capability Assessment

Once each practice has been assessed, the results are summarised as a single rating for the given dimension of the process. Practices listed in bold should be used primarily to make this summary assessment, with the other practices used when making a choice between two possible assessments. In the example shown in Figure 2, the assessment for dimension one would be Largely Adequate, although the two practices with lower assessments indicate where additional attention should be focused. A purely mechanical process with a mathematical summation has been deliberately avoided in order to provide enough flexibility within the model for differences of pedagogy, technology, organisational culture and national culture.
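Because the model deliberately avoids a mechanical summation, the following is only a sketch of how an assessor might generate a starting suggestion for a dimension, which is then confirmed or overridden by judgement. The use of a median, the tie-breaking rule, the intermediate rating labels and the practice names are all assumptions made for this example; the ratings are hypothetical and are not the values shown in Figure 2.

```python
from enum import IntEnum
from statistics import median_high, median_low


class Rating(IntEnum):
    # Intermediate labels are assumed for this sketch.
    NOT_ADEQUATE = 0
    PARTIALLY_ADEQUATE = 1
    LARGELY_ADEQUATE = 2
    FULLY_ADEQUATE = 3


def candidate_summary(essential, useful):
    """Suggest a dimension rating; the assessor makes the final call.

    essential: ratings of the practices listed in bold
    useful:    ratings of the other practices, used only to break ties
    """
    low, high = median_low(essential), median_high(essential)
    if low == high or not useful:
        return low  # clear middle rating, or nothing to break the tie with
    # The essential practices sit between two ratings, so lean on the others.
    midpoint = (low + high) / 2
    return high if sum(useful) / len(useful) > midpoint else low


def needs_attention(practices, summary):
    """Return the practices rated below the suggested summary."""
    return sorted(name for name, rating in practices.items() if rating < summary)


# Hypothetical ratings for the essential practices of one dimension.
essential = {
    "practice A": Rating.LARGELY_ADEQUATE,
    "practice B": Rating.LARGELY_ADEQUATE,
    "practice C": Rating.PARTIALLY_ADEQUATE,
    "practice D": Rating.FULLY_ADEQUATE,
}
useful = [Rating.LARGELY_ADEQUATE]

summary = candidate_summary(list(essential.values()), useful)
print(summary.name)                         # LARGELY_ADEQUATE
print(needs_attention(essential, summary))  # ['practice C']
```

Listing the lower-rated practices alongside the suggestion keeps the detail that a single summary rating would otherwise hide.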

It should be noted that experience of applying this type of assessment in the field of software engineering and with the first version of the eMM suggests that most, if not all, institutions initially assessed will show a low level of capability for the processes selected (SEI, 2004; Marshall, 2005). This is not surprising as one of the drivers for the model in the first place is the widely held perception that e-learning could be implemented more effectively and efficiently in most institutions.

Institutional Context

This discussion of the methodology uses the word 'institution' to indicate the level at which assessments are conducted. It is, however, entirely possible and useful to conduct assessments using other organisational levels or forms of grouping courses. Potentially this could include:

- Faculties or Colleges of an institution

- Different campuses of an institution

- Different modes of delivery (distance versus face-to-face)

- Different forms of support and course development/creation (centrally versus ad-hoc)

In these cases the courses used to find evidence of capability would be selected as being representative of the institutional aspect of interest.

Modifying the eMM to reflect local concerns

It is entirely possible to extend or modify the eMM to reflect issues of particular concern to a given sector or context, such as legislative requirements, e-learning practices required by accreditation bodies, or contextual factors arising from local experience or culture. Normally this should be done at the level of the practices as this would then still allow for comparison at the summary process level.

If a particular aspect of e-learning capability is identified, along with evidence to support its effectiveness, that needs to be reflected as a process, then please contact the author with the details so that it can be accommodated or included in future versions of the eMM.